C# Multi-Layer Perceptron

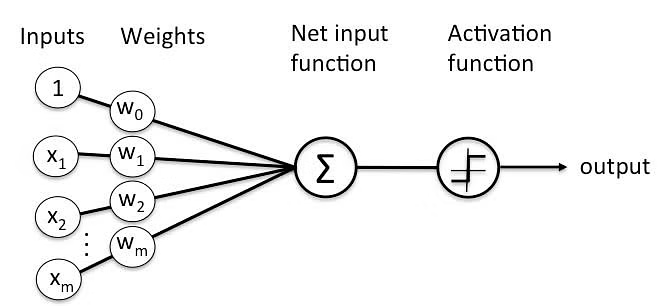

The perceptron, the basic building block of the neural network, was invented by Frank Rosenblatt in January 1957 at the Cornell Aeronautical Laboratory, Inc., in Buffalo, New York. The invention was the result of research published as “The perceptron — A perceiving and recognizing automaton.”

What is a perceptron in simple terms?

A perceptron is a neural network unit that performs computations to detect features or business intelligence in the input data. It is a function that takes its input x, multiplies it by learned weight coefficients, and generates an output value f(x).
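As a minimal sketch, assuming a sigmoid activation and a bias term b (both covered in the math that follows), a single perceptron unit is just a weighted sum of its inputs passed through the activation. The helper name PerceptronOutput and the sample values are hypothetical, chosen only for illustration.

```csharp
using System;

// Logistic sigmoid: squashes any real number into the open interval (0, 1).
static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));

// One perceptron unit (hypothetical helper, not part of the article's code):
// f(x) = S(b + x1*W1 + x2*W2 + ...).
static double PerceptronOutput(double[] x, double[] w, double b)
{
    if (x.Length != w.Length)
        throw new ArgumentException("x and w must have the same length.");

    double yIn = b;                 // start from the bias
    for (int i = 0; i < x.Length; i++)
        yIn += x[i] * w[i];         // add each input times its weight

    return Sigmoid(yIn);            // squash the weighted sum into (0, 1)
}

double fx = PerceptronOutput(new[] { 1.0, 0.5, -1.0 }, new[] { 0.4, 0.2, 0.3 }, 0.1);
Console.WriteLine(fx);
```

With these values the weighted sum is y_in = 0.1 + 0.4 + 0.1 - 0.3 = 0.3, so the unit outputs S(0.3), roughly 0.574.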

Fundamental Perceptron Math

The math comes in three parts:

First, the feedforward step: each input value is multiplied by its weight, the products are summed, and a bias value is added.

$$y_{in} = b + x_1W_1 + x_2W_2 + x_3W_3 + \cdots$$

Next, the activation (or "squashing") function maps $y_{in}$ into the range (0, 1). Here we use the sigmoid:

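$$S(x) = \frac{1}{1 + e^{-x}}$$

Finally, the weight changes (deltas) are calculated, where $\alpha$ is the learning rate, $t$ is the target and $x$ is the input value. In the classic perceptron learning rule, these updates are applied only when the unit's output differs from the target: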

$$\begin{aligned}
\Delta W_1 &= \alpha t x_1 \\
\Delta W_2 &= \alpha t x_2 \\
\Delta W_3 &= \alpha t x_3 \\
\Delta W_4 &= \alpha t x_4 \\
\Delta b &= \alpha t
\end{aligned}$$
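The update rule can be sketched in C# as follows. This is a minimal sketch under stated assumptions: as in the classic perceptron rule, it uses bipolar inputs and targets (-1 or +1) and swaps the sigmoid for a bipolar step activation (+1 if y_in > 0, otherwise -1), so the output can be compared directly with t to decide whether to update. The bipolar AND data set and the names Step and Predict are illustrative, not taken from the original.

```csharp
using System;

// Bipolar AND: inputs and targets in {-1, +1} (illustrative data).
double[][] x =
{
    new[] { 1.0, 1.0 }, new[] { 1.0, -1.0 },
    new[] { -1.0, 1.0 }, new[] { -1.0, -1.0 },
};
double[] t = { 1.0, -1.0, -1.0, -1.0 };

double[] w = new double[2];   // weights start at zero
double b = 0.0;               // bias starts at zero
double alpha = 1.0;           // learning rate

// Assumed bipolar step activation: +1 if y_in > 0, otherwise -1.
static double Step(double yIn) => yIn > 0 ? 1.0 : -1.0;

// Feedforward: y_in = b + sum(x_i * W_i), then the step activation.
double Predict(double[] input)
{
    double yIn = b;
    for (int i = 0; i < w.Length; i++) yIn += input[i] * w[i];
    return Step(yIn);
}

bool changed = true;
int epochs = 0;
while (changed && epochs < 100)   // stop once a full pass makes no updates
{
    changed = false;
    epochs++;
    for (int p = 0; p < x.Length; p++)
    {
        if (Predict(x[p]) != t[p])                // update only on a wrong answer
        {
            for (int i = 0; i < w.Length; i++)
                w[i] += alpha * t[p] * x[p][i];   // Delta W_i = alpha * t * x_i
            b += alpha * t[p];                     // Delta b = alpha * t
            changed = true;
        }
    }
}

Console.WriteLine($"epochs={epochs}, w=[{w[0]}, {w[1]}], b={b}");
```

On this data the loop settles on W = [1, 1] and b = -1 after two passes, which classifies all four patterns correctly.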

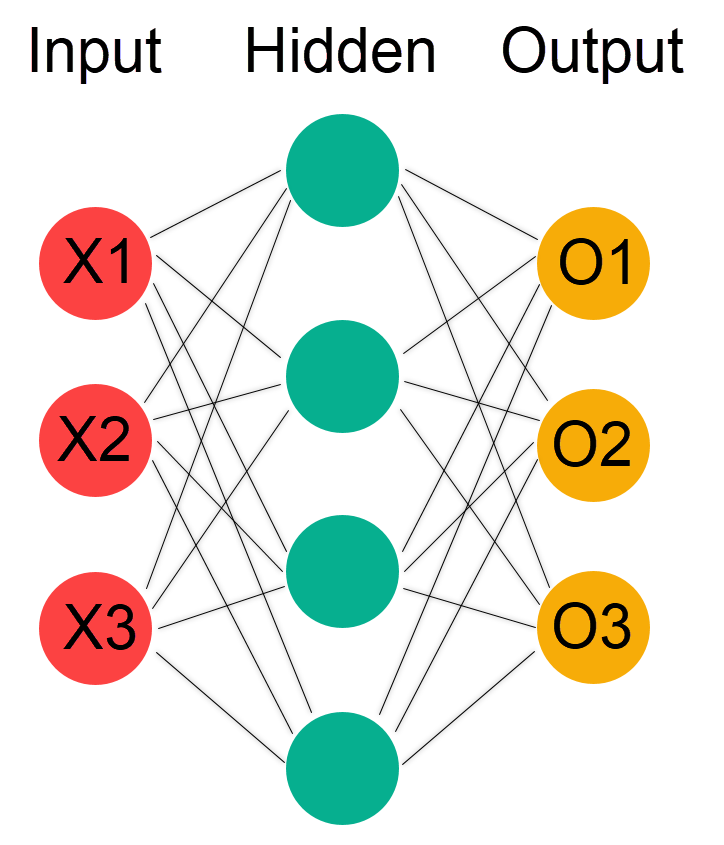

Implementing the Network

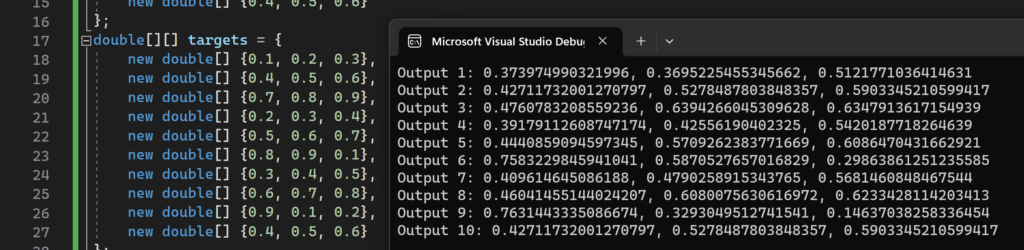

To train this simple neural network we need an array of training data. Here the training set contains ten samples of three input values each, plus a matching set of ten target samples of three output values each; together they describe the inputs along with their expected outputs. (In this example the targets are identical to the inputs, so the network is learning to reproduce its input.) We can now create the neural network, whose constructor takes four parameters:

- The Number of Input Nodes

- The Number of Nodes in the Hidden Layer

- The Number of Output Nodes

- The Learning Rate

The network we will create, as shown in the diagram, has 3 inputs, a hidden layer containing 4 nodes, and 3 outputs.
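Because every input node connects to every hidden node, and every hidden node connects to every output node (the weightsInputHidden and weightsHiddenOutput arrays in the code are sized [numInputs, numHidden] and [numHidden, numOutputs]), the weight count for this 3-4-3 network works out as follows. If each hidden and output node also carries a bias b, as in the feedforward equation, that adds 4 + 3 = 7 more trainable values.

$$3 \times 4 + 4 \times 3 = 12 + 12 = 24 \text{ weights}$$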

Next, we iterate forwards and backwards through the network 10,000 times, each time refining the weights, and hopefully arrive at a set of weights that lets the network produce outputs close to the expected values in the training data.
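Because the training loop calls Backpropagate once per sample, the weights are updated after every individual sample (often called online or stochastic updating) rather than once per full pass over the data. Over the whole run that amounts to:

$$10{,}000 \text{ passes} \times 10 \text{ samples} = 100{,}000 \text{ weight updates}$$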

Finally, we'll print the calculated outputs so we can see how close the network comes to the expected outputs.
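One simple way to put a number on "how close" is the mean squared error between each output vector and its target vector. The helper MeanSquaredError below is a hypothetical addition, not part of the article's code, and the example numbers are made up for illustration.

```csharp
using System;

// Hypothetical helper: mean squared error between one output vector
// and its target vector (0 means a perfect match).
static double MeanSquaredError(double[] output, double[] target)
{
    if (output.Length != target.Length)
        throw new ArgumentException("output and target must have the same length.");

    double sum = 0;
    for (int i = 0; i < output.Length; i++)
    {
        double diff = target[i] - output[i];
        sum += diff * diff;           // accumulate squared differences
    }
    return sum / output.Length;       // average over the vector
}

// Example with made-up numbers: an output close to its target gives a small error.
double mse = MeanSquaredError(new[] { 0.12, 0.21, 0.29 }, new[] { 0.1, 0.2, 0.3 });
Console.WriteLine($"MSE = {mse:F6}");
```

In the article's printing loop you could print MeanSquaredError(output, targets[i]) next to each output; smaller values indicate a closer fit.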

By adjusting the number of nodes in the hidden layer and the learning rate, we can tune the network until it produces the expected outputs. Once this is achieved, we can feed the network a new set of data so it can calculate a new set of values.

```csharp
using System;
using Perceptron;

// Ten training samples, each with three input values.
double[][] inputs =
{
    new double[] { 0.1, 0.2, 0.3 },
    new double[] { 0.4, 0.5, 0.6 },
    new double[] { 0.7, 0.8, 0.9 },
    new double[] { 0.2, 0.3, 0.4 },
    new double[] { 0.5, 0.6, 0.7 },
    new double[] { 0.8, 0.9, 0.1 },
    new double[] { 0.3, 0.4, 0.5 },
    new double[] { 0.6, 0.7, 0.8 },
    new double[] { 0.9, 0.1, 0.2 },
    new double[] { 0.4, 0.5, 0.6 }
};

// Expected outputs, one per sample (identical to the inputs in this example).
double[][] targets =
{
    new double[] { 0.1, 0.2, 0.3 },
    new double[] { 0.4, 0.5, 0.6 },
    new double[] { 0.7, 0.8, 0.9 },
    new double[] { 0.2, 0.3, 0.4 },
    new double[] { 0.5, 0.6, 0.7 },
    new double[] { 0.8, 0.9, 0.1 },
    new double[] { 0.3, 0.4, 0.5 },
    new double[] { 0.6, 0.7, 0.8 },
    new double[] { 0.9, 0.1, 0.2 },
    new double[] { 0.4, 0.5, 0.6 }
};

// 3 input nodes, 4 hidden nodes, 3 output nodes, learning rate 0.01.
NeuralNetwork nn = new NeuralNetwork(3, 4, 3, 0.01);

// Train for 10,000 passes over the data. FeedForward must run before
// Backpropagate, because Backpropagate uses the activations it caches.
for (int epoch = 0; epoch < 10000; epoch++)
{
    for (int j = 0; j < inputs.Length; j++)
    {
        nn.FeedForward(inputs[j]);
        nn.Backpropagate(inputs[j], targets[j]);
    }
}

// Print the trained network's output for each training input.
for (int i = 0; i < inputs.Length; i++)
{
    double[] output = nn.FeedForward(inputs[i]);
    Console.WriteLine($"Output {i + 1}: {string.Join(", ", output)}");
}
```

Neural Network

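The code below builds the network and provides methods for forward and backward propagation. In this network we're using the sigmoid activation function; it can be replaced by ReLU or another activation function, in which case the sigmoid derivative terms in Backpropagate must be changed to match.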
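Before the full class, here is a small sketch of the two activation functions mentioned above together with their derivatives. The sigmoid derivative can be written in terms of the activation itself, a(1 - a), which is exactly the form Backpropagate uses (outputLayer[i] * (1 - outputLayer[i]) and hiddenLayer[i] * (1 - hiddenLayer[i])). The helper names are hypothetical, and the ReLU derivative is taken as 0 at z = 0 by convention.

```csharp
using System;

// Sigmoid activation, plus its derivative written in terms of the activation
// a = Sigmoid(z): a * (1 - a). The network class stores only activations,
// so this "from output" form is the convenient one.
static double Sigmoid(double z) => 1.0 / (1.0 + Math.Exp(-z));
static double SigmoidDerivativeFromOutput(double a) => a * (1.0 - a);

// ReLU activation. Since ReLU(z) > 0 exactly when z > 0, its derivative can
// also be read off the activation (0 at z = 0 by convention).
static double Relu(double z) => Math.Max(0.0, z);
static double ReluDerivativeFromOutput(double a) => a > 0.0 ? 1.0 : 0.0;

foreach (double z in new[] { -2.0, 0.0, 2.0 })
{
    double s = Sigmoid(z);
    double r = Relu(z);
    Console.WriteLine($"z = {z}: sigmoid = {s:F4} (slope {SigmoidDerivativeFromOutput(s):F4}), " +
                      $"ReLU = {r} (slope {ReluDerivativeFromOutput(r)})");
}
```

Swapping to ReLU would mean replacing both the activation call in FeedForward and the a(1 - a) terms in Backpropagate; with targets in the 0 to 1 range, keeping the sigmoid on the output layer is a common choice.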

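```csharp
using System;

namespace Perceptron;

// A fully connected network with one hidden layer and sigmoid activations.
public class NeuralNetwork
{
    private readonly int numInputs;
    private readonly int numHidden;
    private readonly int numOutputs;

    private readonly double[,] weightsInputHidden;   // [input, hidden]
    private readonly double[,] weightsHiddenOutput;  // [hidden, output]
    private readonly double[] biasHidden;            // bias b for each hidden node
    private readonly double[] biasOutput;            // bias b for each output node

    // Activations and error terms cached between FeedForward and Backpropagate.
    private readonly double[] hiddenLayer;
    private readonly double[] outputLayer;
    private readonly double[] hiddenLayerError;
    private readonly double[] outputLayerError;

    private readonly double learningRate;

    public NeuralNetwork(int numInputs, int numHidden, int numOutputs, double learningRate)
    {
        this.numInputs = numInputs;
        this.numHidden = numHidden;
        this.numOutputs = numOutputs;
        this.learningRate = learningRate;

        weightsInputHidden = new double[numInputs, numHidden];
        weightsHiddenOutput = new double[numHidden, numOutputs];
        biasHidden = new double[numHidden];
        biasOutput = new double[numOutputs];

        hiddenLayer = new double[numHidden];
        outputLayer = new double[numOutputs];
        hiddenLayerError = new double[numHidden];
        outputLayerError = new double[numOutputs];

        RandomizeWeights();
    }

    // Initialise every weight and bias to a small random value in [-0.5, 0.5).
    private void RandomizeWeights()
    {
        Random r = new Random();

        for (int i = 0; i < numInputs; i++)
            for (int j = 0; j < numHidden; j++)
                weightsInputHidden[i, j] = r.NextDouble() - 0.5;

        for (int i = 0; i < numHidden; i++)
            for (int j = 0; j < numOutputs; j++)
                weightsHiddenOutput[i, j] = r.NextDouble() - 0.5;

        for (int i = 0; i < numHidden; i++) biasHidden[i] = r.NextDouble() - 0.5;
        for (int i = 0; i < numOutputs; i++) biasOutput[i] = r.NextDouble() - 0.5;
    }

    // Forward pass: y_in = b + sum(x * W) for each node, then the sigmoid.
    public double[] FeedForward(double[] inputs)
    {
        for (int i = 0; i < numHidden; i++)
        {
            double sum = biasHidden[i];
            for (int j = 0; j < numInputs; j++)
                sum += inputs[j] * weightsInputHidden[j, i];
            hiddenLayer[i] = Sigmoid(sum);
        }

        for (int i = 0; i < numOutputs; i++)
        {
            double sum = biasOutput[i];
            for (int j = 0; j < numHidden; j++)
                sum += hiddenLayer[j] * weightsHiddenOutput[j, i];
            outputLayer[i] = Sigmoid(sum);
        }

        // Return a copy so callers cannot alter the network's cached state.
        return (double[])outputLayer.Clone();
    }

    private static double Sigmoid(double x) => 1.0 / (1.0 + Math.Exp(-x));

    // Backward pass. Call FeedForward(inputs) first: this method relies on
    // the activations cached in hiddenLayer and outputLayer.
    public void Backpropagate(double[] inputs, double[] targets)
    {
        // Output error term: (target - output) * sigmoid derivative.
        for (int i = 0; i < numOutputs; i++)
            outputLayerError[i] = (targets[i] - outputLayer[i]) * outputLayer[i] * (1 - outputLayer[i]);

        // Hidden error term: output errors propagated back through the weights.
        for (int i = 0; i < numHidden; i++)
        {
            double sum = 0;
            for (int j = 0; j < numOutputs; j++)
                sum += outputLayerError[j] * weightsHiddenOutput[i, j];
            hiddenLayerError[i] = sum * hiddenLayer[i] * (1 - hiddenLayer[i]);
        }

        // Update hidden -> output weights and output biases.
        for (int i = 0; i < numHidden; i++)
            for (int j = 0; j < numOutputs; j++)
                weightsHiddenOutput[i, j] += learningRate * outputLayerError[j] * hiddenLayer[i];
        for (int j = 0; j < numOutputs; j++)
            biasOutput[j] += learningRate * outputLayerError[j];

        // Update input -> hidden weights and hidden biases.
        for (int i = 0; i < numInputs; i++)
            for (int j = 0; j < numHidden; j++)
                weightsInputHidden[i, j] += learningRate * hiddenLayerError[j] * inputs[i];
        for (int j = 0; j < numHidden; j++)
            biasHidden[j] += learningRate * hiddenLayerError[j];
    }
}
```

Network Output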

Since the weights are initialized randomly, each run produces a different result, and the code may need to be run several times before a well-optimized network is found. In the next post I will add a way to store the network as a JSON file and load it back into the network.